For academic scientists, publications are the primary currency for success, and so peer review is a central part of scientific life. When discussing peer review, it’s always worth remembering that since it depends on ‘peers’, broader issues across ecology are often reflected in issues with peer review. A series of papers from Charles W. Fox and coauthors Burns, Muncy, and Meyer does a great job of illustrating this point, showing how diversity issues in ecology are writ small in the peer review process.

The journal Functional Ecology provided the authors with up to 10 years of data on the submission, editorial, and review process (between 2004 and 2014, maximum). These data provide a unique opportunity to explore how factors such as gender and geographic locale affect the peer review process and its outcomes, and how this has changed over the past decade.

Author and reviewer gender were assigned using an online database (genderize.io) that includes 200,000 names and, for each name, an associated probability reflecting its gender. The geographic locations of editors and reviewers were also identified based on their profiles. There are some clear limitations to this approach, particularly that Asian names had to be excluded. Still, 97% of names were present in the genderize.io database, and 94% of those names were associated with a single gender >90% of the time.
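To make the name-based approach concrete, here is a minimal sketch of how a probability threshold could be applied to a name table. It is not the authors' code: the lookup table, the names and probabilities in it, the function names, and the choice to use 0.90 as a hard cutoff are all assumptions made for illustration, and the sketch uses a local dictionary rather than calling genderize.io.

```python
from typing import Optional

# Hypothetical lookup table standing in for a name database such as genderize.io.
# The names and probabilities are invented for illustration only.
NAME_TABLE: dict[str, tuple[str, float]] = {
    "charles": ("male", 0.99),
    "anna": ("female", 0.98),
    "robin": ("male", 0.62),
}


def assign_gender(first_name: str, threshold: float = 0.90) -> Optional[str]:
    """Return a gender only when the name is in the table and the
    associated probability exceeds the (assumed) threshold."""
    entry = NAME_TABLE.get(first_name.strip().lower())
    if entry is None:
        return None  # name not in the database
    gender, probability = entry
    return gender if probability > threshold else None


def coverage(names: list[str]) -> tuple[float, float]:
    """Return (fraction of names found in the table,
    fraction of found names assigned above the threshold)."""
    found = [n for n in names if n.strip().lower() in NAME_TABLE]
    confident = [n for n in found if assign_gender(n) is not None]
    frac_found = len(found) / len(names) if names else 0.0
    frac_confident = len(confident) / len(found) if found else 0.0
    return frac_found, frac_confident
```

The two numbers returned by `coverage` correspond to the two kinds of figures quoted above: the share of names present in the database, and the share of those names tied to a single gender with high probability. Names that are missing or ambiguous return `None` instead of being guessed.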

Many—even most—of Fox et al.’s findings are in line with what has already been shown regarding the causes and effects of gender gaps in academia. But they are interesting, nonetheless. Some of the gender gaps seem to be tied to age: senior editors were all male, and although females make up 43% of first authors on papers submitted to Functional Ecology, they are only 25% of senior authors.

Implicit biases in identifying reviewers are also fairly common: far fewer women were suggested than men, even when female authors or female editors were identifying reviewers. Female editors did invite more female reviewers than male editors did ("Male editors selected less than 25 percent female reviewers even in the year they selected the most women, but female editors consistently selected ~30–35 percent female"). Female authors also suggested slightly more female reviewers than male authors did.

Some of the statistics are great news: there was no effect of author gender or editor gender on how papers were handled and their chances of acceptance, for example. Further, the mean score given to a paper by male and female reviewers did not differ – reviewer gender isn’t affecting your paper’s chance of acceptance. And when the last or senior author on a paper is female, a greater proportion of all the authors on the paper are female too.

The most surprising statistic, to me, was that there was a small (2%) but consistent effect of handling editor gender on the likelihood that male reviewers would respond to review requests. They were less likely to respond, and less likely to agree to review, if the editor making the request was female.

That there are still observable effects of gender in peer review despite an increasing awareness of the issue should tell us that the effects of other, less-discussed forms of bias are probably similar or greater. Fox et al. hint at this when they show how important the effect of geographic locale is on reviewer choice. Overwhelmingly, editors over-selected reviewers from their own geographic locality. This is not surprising, since social and professional networks are geographically driven, but it can have the effect of making science more insular. Other sources of bias – race, country of origin, language – are more difficult to measure from these data, but hopefully the results from these papers are reminders that such biases can have measurable effects.

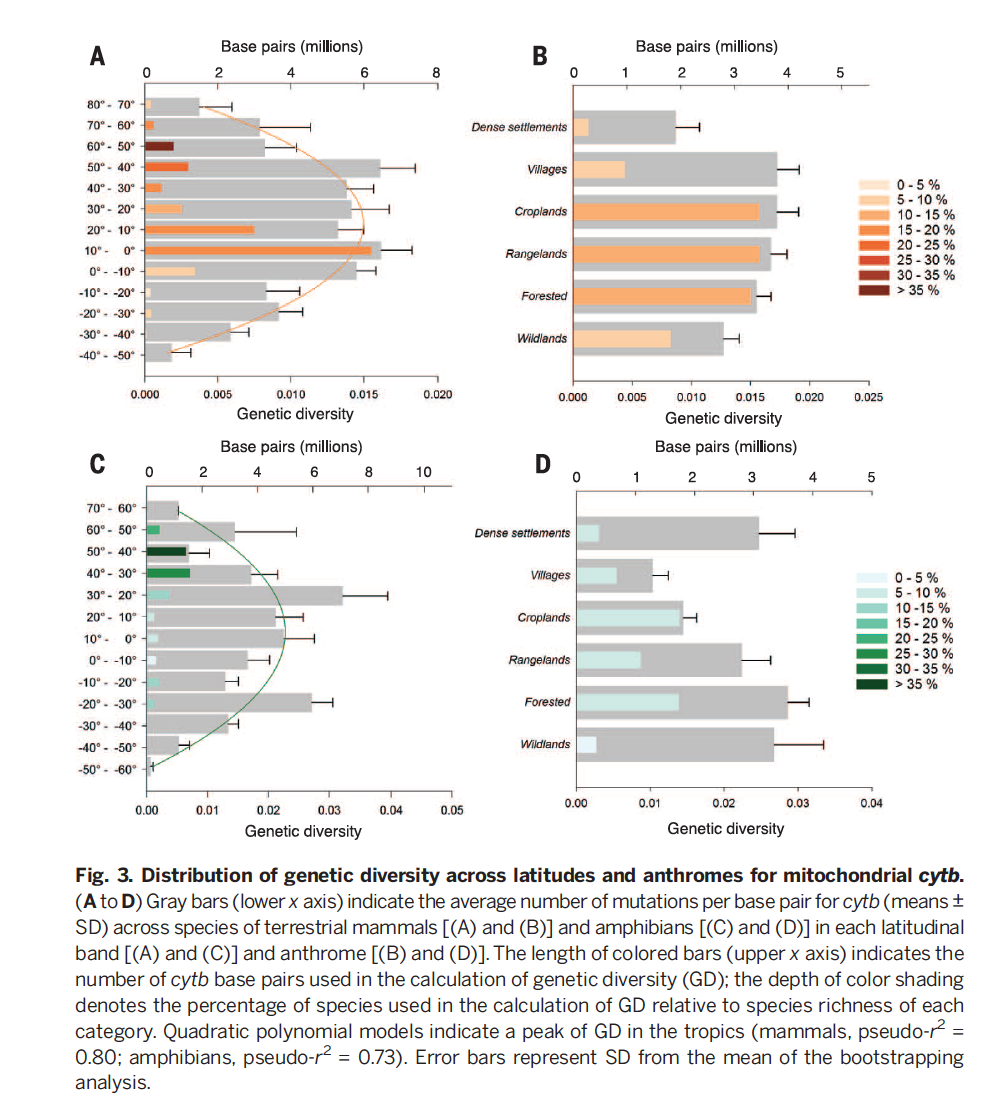

From Fox et al. 2016a.

References:

Fox, C. W., Burns, C. S., Muncy, A. D. and Meyer, J. A. (2016), Gender differences in patterns of authorship do not affect peer review outcomes at an ecology journal. Functional Ecology, 30: 126–139. doi:10.1111/1365-2435.12587

Fox, C. W., Burns, C. S. and Meyer, J. A. (2016), Editor and reviewer gender influence the peer review process but not peer review outcomes at an ecology journal. Functional Ecology, 30: 140–153. doi:10.1111/1365-2435.12529