This is a guest post by Aspen Reese, a graduate student at Duke University, who in addition to studying the role of trophic interactions in driving secondary succession, is interested in how ecological communities are defined. Below she explains one possible way to define communities explicitly; note that the calculations below require the community to be represented as a network.

Because there are so many different ways of defining “community”, it can be hard to know what, exactly, we’re talking about when we use the term. It’s clear, though, that we need to take a close look at our terminology.

In her recent post, Caroline Tucker offers a great overview of why this is such an important conversation to have. As she points out, we aren’t always completely forthright in laying out the assumptions underlying the definition used in any given study or subdiscipline. The question remains then: how to function—how to do and how to communicate good research—in the midst of such a terminological muddle?

We don’t need a single, objective definition of community (could we ever agree? And why should we?). What we do need, though, are ways to offer transparent, rigorous definitions of the communities we study. Moreover, we need a transferable system for quantifying these definitions.

One way we might address this need is to borrow a concept from the philosophy of biology, called entification. Entification is a way of quantifying thingness. It allows us to answer the question: how much does my study subject resemble an independent entity? And, more generally, what makes something an entity at all?

Stanley Salthe (1985) gives us a helpful definition: entities can be defined by their boundaries, degree of integration, and continuity (Salthe also includes scale, but in a very abstract way, so I'll leave that out for now). What we need, then, is some way to quantify the boundedness, integration, and continuity of any given community. By conceptualizing the community as an ecological network* (populations of organisms as nodes and their interactions as edges), that kind of quantification becomes possible.

Consider the following framework:

Boundedness

Communities are discontinuous from the environment around them, but how discrete that boundary is varies widely. We can quantify this discreteness by measuring the number of nodes that don’t have interactions outside the system relative to the total number of nodes in the system (Fig. 1a).

$$\text{Boundedness} = \frac{\text{Total nodes without external edges}}{\text{Total nodes}}$$
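As a minimal sketch of the boundedness ratio, the Python below assumes a community is given as a set of member names plus a list of interaction pairs, where any pair with exactly one end outside the set counts as an external edge for the member at the other end. The function name and the species in the toy web are hypothetical illustrations, not data from the post.

```python
def boundedness(community, edges):
    """Fraction of community nodes that have no edges to nodes outside the community.

    community: set of node names treated as inside the community (assumption).
    edges: iterable of (a, b) pairs; a pair with one end outside `community`
           marks the inside end as having an external edge.
    """
    has_external = set()
    for a, b in edges:
        if a in community and b not in community:
            has_external.add(a)
        elif b in community and a not in community:
            has_external.add(b)
    return (len(community) - len(has_external)) / len(community)


# Hypothetical toy web: four species inside, one outside predator ("heron").
community = {"algae", "mayfly", "stonefly", "sculpin"}
edges = [
    ("algae", "mayfly"),
    ("mayfly", "stonefly"),
    ("stonefly", "sculpin"),
    ("mayfly", "sculpin"),
    ("sculpin", "heron"),  # sculpin interacts with something outside the community
]
print(boundedness(community, edges))  # 0.75
```

Only the sculpin has a partner outside the community, so three of the four nodes count toward the numerator.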

Integration

Communities exhibit the interdependence and connections of their parts, i.e. integration. For any given level of complexity (which we can define as the number of constitutive part types, i.e. nodes (McShea 1996)), a system becomes more integrated as the networks and feedback loops between the constitutive part types become denser and the average path length decreases. Therefore, the degree of integration can be measured as one minus the ratio of the average path length (the average shortest distance between two parts) to the total number of parts (Fig. 1b).

$$\text{Integration} = 1 - \frac{\text{Average path length}}{\text{Total nodes}}$$
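The post does not say whether paths follow the direction of trophic links, so the sketch below assumes an undirected, unweighted network, finds shortest paths by breadth-first search, and averages only over pairs that are connected at all. The function names and the toy web are hypothetical.

```python
from collections import deque
from itertools import combinations


def shortest_path_lengths(nodes, edges):
    """Breadth-first-search distances from every node, treating edges as undirected."""
    adj = {n: set() for n in nodes}
    for a, b in edges:
        if a in adj and b in adj:  # edges that leave the community are ignored
            adj[a].add(b)
            adj[b].add(a)
    dist = {}
    for source in nodes:
        seen = {source: 0}
        queue = deque([source])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v not in seen:
                    seen[v] = seen[u] + 1
                    queue.append(v)
        dist[source] = seen
    return dist


def integration(nodes, edges):
    """1 - (average shortest path length / number of nodes).

    Assumes an undirected, unweighted network; pairs with no connecting
    path are left out of the average.
    """
    dist = shortest_path_lengths(nodes, edges)
    lengths = [dist[a][b] for a, b in combinations(nodes, 2) if b in dist[a]]
    average_path_length = sum(lengths) / len(lengths)
    return 1 - average_path_length / len(nodes)


# Hypothetical toy web, not data from the post.
nodes = ["algae", "mayfly", "stonefly", "sculpin"]
edges = [
    ("algae", "mayfly"),
    ("mayfly", "stonefly"),
    ("stonefly", "sculpin"),
    ("mayfly", "sculpin"),
]
print(round(integration(nodes, edges), 3))  # 0.667
```

Here the six pairs have an average path length of 4/3, so integration is 1 - (4/3)/4 = 2/3. Because every pair is at least one step apart, a fully connected web of N nodes scores 1 - 1/N under this formula, so scores close to 1 are only reachable in webs with many nodes.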

Continuity

All entities endure, if only for a time. And all entities change, if only due to entropy. The more similar a community is to its historical self, the more continuous it is. Using networks from two time points, the degree of continuity can be calculated as a Jaccard index: the number of interactions present at both times relative to the total number of distinct interactions observed at either time (Fig. 1c).

$$\text{Continuity} = \frac{\text{Total edges} - \text{Changed edges}}{\text{Total edges}}$$
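Treating each time point's interactions as a set makes the Jaccard form explicit: "total edges" is the union of the two sets and "changed edges" is their symmetric difference, so the ratio reduces to intersection over union. This sketch assumes every interaction is stored the same way at both times (here as hypothetical `(prey, predator)` tuples), since `("a", "b")` and `("b", "a")` would otherwise count as different edges.

```python
def continuity(edges_t1, edges_t2):
    """Jaccard index of the interaction sets observed at two time points.

    total   = every distinct interaction seen at either time (set union)
    changed = interactions present at only one of the two times (symmetric difference)
    """
    e1, e2 = set(edges_t1), set(edges_t2)
    total = e1 | e2
    changed = e1 ^ e2
    return (len(total) - len(changed)) / len(total)


# Hypothetical (prey, predator) interactions at two sampling dates.
june = {("algae", "mayfly"), ("mayfly", "stonefly"),
        ("stonefly", "sculpin"), ("mayfly", "sculpin")}
august = {("algae", "mayfly"), ("mayfly", "stonefly"),
          ("mayfly", "sculpin"), ("algae", "caddisfly")}
print(continuity(june, august))  # 0.6
```

Five distinct interactions appear across the two dates and two of them change, giving (5 - 2)/5 = 0.6.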

Fig. 1: How to calculate the three proposed metrics for describing entities: (A) boundedness, (B) integration, and (C) continuity.

Let's try this method out on an arctic stream food web (Parker and Huryn 2006). Trophic interactions in the stream were measured in June and August of 2002 (Fig. 2). If we exclude detritus and treat the waterfowl as outside the community, we calculate that the stream has a degree of boundedness of 0.79 (i.e. ~80% of its nodes have no interactions outside the community), a degree of integration of 0.98 (i.e. the average path length is short relative to the number of nodes, only about 2% of it), and a degree of continuity of 0.73 (i.e. almost 3/4 of the interactions are constant over the course of the two months). It's as easy as counting nodes and edges. Not too bad! But what does it mean?

Fig. 2: The food web community in an arctic stream over summer 2002. Derived from Parker and Huryn (2006).

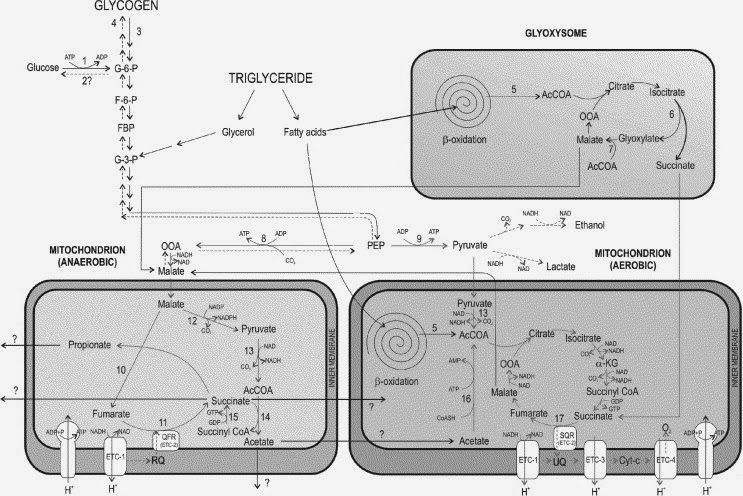

Well, compare the arctic stream to a molecular example. Using a simplified network (Burnell et al. 2005), we can calculate the entification of the cellular respiration pathway (Fig. 3). We find that for the total respiration system, including both the aerobic and anaerobic pathways, boundedness is 0.52 and integration is 0.84. The continuity of the system is likely equal to 1 at most times because both pathways are active and their makeup is highly conserved. However, if we test the continuity of the system when it switches between the aerobic and the anaerobic pathway, the degree of continuity drops to 0.6.

Fig. 3: The anaerobic and aerobic elements of cellular respiration, one part of a cell's metabolic pathway. Derived from Burnell et al. (2005).

Contrary to what you might expect, the ecological entity showed greater integration than the molecular pathway. This makes sense, however: molecular pathways are more linear, which increases the average shortest distance between parts and thereby decreases integration. In contrast, the continuity of molecular pathways can be much higher when considered in aggregate. In general, we would expect boundedness scores for ecological entities to be fairly low, but with large variation between systems. The low boundedness score of the molecular pathway reflects the fact that we are exploring only a small part of the metabolic pathway and including ubiquitous molecules (e.g. NADH and ATP).

Here are three ways such a system could improve community ecology. First, the process can highlight interesting ecological aspects of the system that aren't immediately obvious. For example, food webs display much higher integration when parasites are included, and a recent call (Lafferty et al. 2008) to include these organisms highlights how closer attention to under-recognized parts of a network can drastically change our understanding of a community. Or consider how the recognition that islands, despite their clear physical boundaries, may have low boundedness because of their reliance on marine nutrient subsidies (Polis and Hurd 1996) revolutionized how we study them. Second, this methodology can help a researcher find a research-appropriate, cost-effective definition of the study community that also maximizes its degree of entification. A researcher could use sensitivity analyses to determine what effect changing the definition of her community would have on its characterization. Then, when confronted with the criticism that a certain player or interaction was left out of her study design, she could respond with an informed assessment of whether including further parts or processes would actually change the character of the system in a quantifiable way. Finally, the formalized process of defining a study system will facilitate useful conversation between researchers, especially those who have used different definitions of communities. It will allow more informed comparisons between systems with similar scores on these metrics and help indicate a priori when systems are expected to differ strongly in their behavior and controls.
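The post does not prescribe a particular sensitivity analysis. One simple, hypothetical version toggles each species' membership in turn (members are moved outside the community, outsiders are brought in) and recomputes boundedness, which shows how much the score hinges on any single inclusion decision. The function names and toy web below are illustrative assumptions, and the same loop could wrap the integration or continuity calculations instead.

```python
def boundedness(community, edges):
    """Share of community members with no interaction partner outside the community."""
    has_external = {a for a, b in edges if a in community and b not in community}
    has_external |= {b for a, b in edges if b in community and a not in community}
    return (len(community) - len(has_external)) / len(community)


def membership_sensitivity(community, edges):
    """Boundedness after toggling each node's membership in the community, one at a time.

    Members are moved outside the community; non-members are brought inside.
    Toggles that would leave the community empty are skipped.
    """
    all_nodes = {n for edge in edges for n in edge} | set(community)
    results = {}
    for node in sorted(all_nodes):
        variant = community - {node} if node in community else community | {node}
        if variant:
            results[node] = boundedness(variant, edges)
    return results


# Hypothetical toy web: "heron" is currently treated as outside the community.
community = {"algae", "mayfly", "stonefly", "sculpin"}
edges = [
    ("algae", "mayfly"),
    ("mayfly", "stonefly"),
    ("stonefly", "sculpin"),
    ("mayfly", "sculpin"),
    ("sculpin", "heron"),
]
print(boundedness(community, edges))  # 0.75
for node, score in membership_sensitivity(community, edges).items():
    print(node, round(score, 2))
```

On this toy web, bringing the heron inside raises boundedness from 0.75 to 1.0, while moving the mayfly outside drops it to 0.0, because every remaining member then has a partner beyond the boundary.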

Communities, or ecosystems for that matter, aren't homogeneous; they don't have clear boundaries; they change drastically over time; we don't know when they begin or end; and no two are exactly the same (see Gleason 1926). Not only are communities unlike organisms, but it is often unclear whether communities or ecosystems are units of existence at all (van Valen 1991). We may never find a single objective definition for what they are. Nevertheless, we work with them every day, and it would certainly be helpful if we could come to terms with their continuous nature. Whatever definition you choose for your own research, make it explicit and make it quantifiable. And be willing to discuss it with your peers. It will make your, their, and my research that much better.