This is the first in a series of guest posts by Sarah Seiter, a teaching fellow at the University of Colorado, Boulder, about using scientific teaching, active learning, and flipping the classroom.

As a faculty member, you may sometimes find teaching a chore: your lectures compete with smartphones and laptops, and some students see themselves as education “consumers” and haggle over grades. STEM (science, technology, engineering, and math) faculty have a particularly tough gig, because students need substantial background to succeed in these courses and often arrive in the classroom unprepared. Yet the current classroom climate doesn’t seem to be working for students either: about half of STEM college majors ultimately switch to a non-scientific field. It would be easy to frame the problem as one of culture, and we do live in a society that doesn’t always value science or education. However, reforming STEM education might not require social change; instead, we may be able to solve the problem using our own scientific training. In the past few years, a movement called “scientific teaching” has emerged that uses quantitative research skills to improve the classroom experience for instructors and students alike.

So how can you use your research skills to boost your teaching? First, you can use teaching techniques that have been empirically tested and rigorously studied, especially a set of techniques called “active learning”. Second, you can collect data on yourself and your students to gauge your progress and adjust your teaching as needed, a process called “formative assessment”. While this can seem daunting, remember that as a researcher you’re uniquely equipped to overhaul your teaching, using the skills you already rely on in the lab and the field. Like a lot of paradigm shifts in science, using data to guide your teaching seems obvious in hindsight, but it can be revolutionary for you and your students.

What is Active Learning:

There are a lot of definitions of active learning floating around, but in short, active learning techniques force students to engage with the material while it is being taught. More importantly, students practice the material and make mistakes while they are surrounded by a community of peers and instructors who can help. There are many ways to bring active learning strategies into your classroom. Clicker response systems, handheld devices that let students answer short quizzes throughout the lecture, are one option. Case studies are another tool: students read about scientific problems and then apply the information to real-world problems (medical and law schools have been using them for years). I’ll get into more examples of these techniques in Post II; there are lots of free and awesome resources that will allow you to try active learning techniques in your class with minimal investment.

Formative Assessment:

The other way data can help you overhaul your class is through formative assessment: a series of small, frequent, low-stakes assessments of student learning. A lot of college courses use what’s called summative assessment, meaning one or two major exams that test a semester’s worth of material, with a few labs or a term paper for balance. If your goal is to see whether your students learned anything over a semester, this is probably sufficient. This is also fine if you’re trying to weed out underperforming students from your major (but seriously, don’t do that). But if you’re interested in coaching students toward mastery of the subject matter, it probably isn’t enough to tell them how much they’ve learned only after half the course is over. If you think about learning goals the way we think about fitness goals, this is like asking students to qualify for the Boston Marathon without giving them any times for their training runs.

Formative assessment can be done in many ways, such as weekly quizzes or data collected with classroom clicker systems. While a lot of formative assessment research focuses on measuring student progress, instructors also have a lot to gain by measuring their own teaching skills. There are many tools for measuring improvement in teaching skills (K-12 teachers have been formatively assessed for years), but even setting simple goals for yourself (“make at least 5 minutes for student questions”) and monitoring your progress can be really helpful. Post III will talk about how to do (relatively) painless formative assessment in your class.

How does this work and who does it work for:

Scientific teaching is revolutionary because it works for everyone, faculty and students alike. However, it offers particular benefits to some types of instructors and students.

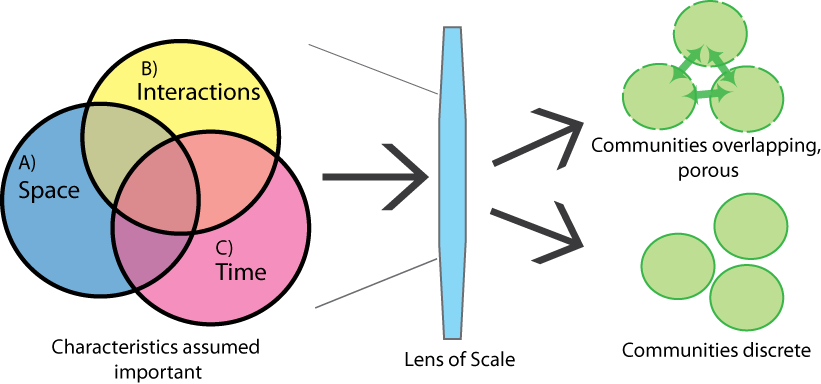

New Faculty: inexperienced faculty can achieve results as good or better than experienced faculty by using evidence based teaching techniques. In a

study at the University of Colorado, physics students taught by a graduate TA using scientific teaching outperformed those taught by an

experienced (and well loved) professor using a standard lecture style (you can read the study here). Faculty who are not native English speakers, or who are simply shy can get a lot of leverage using scientific teaching techniques, because doing in-class activities relieves the pressure to deliver perfect lectures.

|

Test scores between a lecture-taught physics section

and a section taught using active learning techniques. |

Seasoned Faculty: For faculty who already have an established teaching style, scientific teaching can spice up lectures that have become rote or help you address concepts that you see students struggle with year after year. Even if you feel like you have your lectures completely dialed in, consider whether you use the most cutting-edge techniques in your lab, and whether your classroom deserves the same treatment.

Students also stand to gain from scientific teaching, and some groups of students are particularly poised to benefit from it:

Students who don’t plan to go into science: Even in courses for majors, most of the students we teach won’t go on to become scientists. But skills like analyzing data and writing convincing, evidence-based arguments are useful in almost any field. Active learning trains students to be smart consumers of information, and formative assessment teaches students to monitor their own learning: two skills we could stand to see more of in any career.

Students who love science: Active learning can give star students a leg up on the skills they’ll need to succeed as academics, for all the reasons listed above. Occasionally, very bright students balk at active learning because wrestling with complicated data makes them feel stupid. While it can feel awful to watch your smartest students struggle, remember that real scientists have to confront confusing data every day. For students who want research careers, learning to persevere through messy and inconclusive results is critical.

Students who struggle with science: Active learning can be a great leveler for students who come from disadvantaged backgrounds. A University of Washington study showed that active learning and student peer tutoring could eliminate achievement gaps for minority students. If part of the reason you got into academia was to make a difference in educating young people, here is one empirically proven way to do that.

Are there downsides?

Like anything, active learning involves tradeoffs. While the overwhelming evidence suggests that active learning is the best way to train new faculty (the White House even published a report calling for more of it!), there are sometimes roadblocks to scientific teaching.

Content Isn’t King Anymore: Working with data or applying scientific research to policy problems takes time, so instructors can cover fewer examples in class. In active learning, students develop scientific skills like experimental design or technical writing, but after spending an hour hammering out an experiment to test the evolution of virulence, they often feel like they’ve only learned about “one stupid disease”. However, there is substantial evidence that covering topics in depth is more beneficial than surveying many topics. For example, high schoolers who studied a single subject in depth for more than a month were more likely to declare a science major in college than students who covered more topics.

Demands on Instructor Time: I actually haven’t found that active learning takes more time to prepare. Case studies and clickers take up a decent amount of class time, so I spend less time prepping and rehearsing lectures. However, if you already have a slide deck you’ve been using for years, developing clicker questions and class exercises requires an upfront investment of time. Formative assessment can also take more time, although online quiz tools and peer grading can help take some of the pressure off instructors.

If you want to learn more about the theory behind scientific teaching, there are a lot of great resources on the subject:

These podcasts are a great place to start:

http://americanradioworks.publicradio.org/features/tomorrows-college/lectures/

http://www.slate.com/articles/podcasts/education/2013/12/schooled_podcast_the_flipped_classroom.html

This book is a classic in the field:

http://www.amazon.com/Scientific-Teaching-Jo-Handelsman/dp/1429201886